#numpy playlist

Explore tagged Tumblr posts

Text

Machine Learning Fundamentals

Machine learning (ML) is the heartbeat of artificial intelligence, enabling computers to learn patterns from data and make decisions autonomously — think of Spotify curating playlists based on your listening habits or e-commerce platforms predicting your next purchase. It’s a versatile skill applicable across industries, from finance to entertainment, and its importance is only growing as data becomes the new oil. Today, ML is trending toward integration with edge computing, allowing devices like wearables to process data locally, while innovations like federated learning promise privacy-preserving AI training. The future could see ML democratized further through automated machine learning (AutoML), enabling even non-experts to build models. The Certified Machine Learning Engineer program is your gateway into this field, offering hands-on training in supervised and unsupervised learning, algorithms like random forests and gradient boosting, and practical deployment using tools like TensorFlow. This certification validates your ability to craft ML solutions that solve real-world problems, making you a sought-after professional.

To round out your ML expertise, consider the Data Science with Python Certification, which dives into data preprocessing, exploratory analysis, and advanced ML with Python’s rich ecosystem — think Pandas, NumPy, and Scikit-learn. The AI Algorithms Specialist Certification offers a deeper dive into the math behind ML, such as linear algebra and optimization, perfect for those who love theory. For a practical twist, the ML Ops Engineer Certification teaches you to streamline ML workflows, from development to production. Together, these certifications build a robust ML portfolio. Elevate your skills further with AI CERTs��, which provides cutting-edge training in AutoML, AI ethics, and scalable ML deployment, ensuring you’re ready for both current demands and future shifts. With this combination, you’ll be a powerhouse in ML, ready to innovate and lead.

0 notes

Text

New Study Reveals 70% of Python Developers Use Virtual Environments for Efficient Coding. Dive into the Data and Enhance Your Python Skills Today!

Real Python Blog

Unlocking the Power of Python: Key Facts, Hard Information, and Concrete Data

Welcome to our blog, where we delve into the fascinating world of Python programming. In this article, we will provide you with an in-depth exploration of key facts, hard information, and concrete data that highlight the true power and versatility of Python. Brace yourself for a mind-blowing journey filled with numerical insights, objective analysis, and informative content that will leave you astounded.

1. Python's Explosive Growth in the Tech Industry

Let's start by examining the impressive growth of Python in the tech industry. According to the latest statistics, Python is the second most popular programming language globally, with a staggering 8.2 million developers actively using it. This number represents a remarkable 28% increase from the previous year, indicating the immense demand for Python in various sectors.

2. Real-World Applications of Python

Python's versatility extends far beyond its popularity in terms of sheer numbers. The language finds real-world applications in a wide range of fields, including:

Data Science: Python's rich ecosystem of libraries such as NumPy, Pandas, and Matplotlib makes it the go-to language for data analysis and visualization.

Web Development: Python frameworks like Django and Flask enable developers to build robust and scalable web applications with ease.

Artificial Intelligence and Machine Learning: Python's simplicity and extensive libraries like Tensorflow and Keras have propelled its dominance in AI and ML research.

Scripting and Automation: Python's concise syntax and powerful libraries make it ideal for automating repetitive tasks and writing efficient scripts.

3. Growth in Python-Based Technologies

Python's impact extends beyond the core language itself. Let's dive into some key Python-based technologies and their impressive growth:

3.1. Flask Framework

Flask, a lightweight and flexible Python web framework, has experienced a remarkable 50% increase in adoption rates over the past year. Its simplicity and extensibility make it a popular choice among developers for building scalable web applications.

3.2. Pandas Library

Pandas, a powerful data manipulation and analysis library, has gained significant traction in the past few years. With a remarkable 45% increase in usage, Pandas has become an indispensable tool for data scientists and analysts worldwide.

4. Python's Influence on Major Companies

Python has emerged as a language of choice for major companies across various industries. Some notable examples include:

Google: Python serves as a backbone for numerous Google services, including YouTube, Google Search, and Google App Engine.

Netflix: Python's simplicity and efficiency have made it a preferred language for Netflix's extensive data processing and recommendation systems.

Instagram: This popular social media platform utilizes Python for its backend infrastructure, supporting over 1 billion users worldwide.

Spotify: Python plays a crucial role in Spotify's music recommendation algorithms, providing users with personalized playlists and discoverability.

5. The Inherent Advantages of Python

Python offers a myriad of advantages that contribute to its popularity and continued growth:

5.1. Readability and Simplicity

Python's clean and readable syntax allows developers to express complex ideas with minimal code, fostering efficient collaboration and reducing development time.

5.2. Large and Active Community

With millions of dedicated developers worldwide, Python boasts one of the largest and most vibrant communities in the programming world. This thriving community ensures that Python remains up-to-date, constantly evolving, and supported by a vast array of libraries and frameworks.

5.3. Cross-Platform Compatibility

Python effortlessly runs on various operating systems, including Windows, macOS, and Linux. This cross-platform compatibility makes it an ideal choice for developing applications that can seamlessly transition between different environments.

5.4. Extensive Library Ecosystem

Python's extensive library ecosystem empowers developers with a vast array of pre-existing code and functionalities. This eliminates the need to reinvent the wheel, allowing developers to focus on building innovative solutions without getting bogged down in repetitive tasks.

6. RoamNook: Fueling Digital Growth

Now that you've explored the incredible world of Python, it's time to introduce you to RoamNook—an innovative technology company dedicated to fueling digital growth. With expertise in IT consultation, custom software development, and digital marketing, RoamNook stands at the forefront of driving technology advancements.

By harnessing the power of Python, RoamNook delivers cutting-edge solutions that empower businesses to thrive in the digital landscape. Whether it's developing scalable web applications, designing data-driven marketing strategies, or providing expert consultation, RoamNook has the knowledge and passion to help your business succeed.

So, why wait? Give your business the competitive edge it deserves. Reach out to RoamNook today and embark on a transformative journey towards digital growth.

Conclusion: Reflecting on the Power of Python

As we conclude this captivating exploration of Python's power, it's essential to reflect on the impact this language has on our lives and the tech industry as a whole. From its explosive growth to its unparalleled versatility, Python has solidified its position as a dominant force in programming.

By embracing Python, individuals and organizations can unlock their full potential, harnessing this language's simplicity, efficiency, and endless possibilities. So, are you ready to embark on a Pythonic journey? Are you prepared to discover the vast horizons that Python has to offer?

We invite you to join the ever-expanding Python community, leverage the power of RoamNook's expertise, and embark on a transformative digital journey. Start harnessing the power of Python today and witness firsthand the wonders it can bring to your life and career.

Related Content:

RoamNook - Fueling digital growth through innovative technology solutions

Python.org - The official website of the Python programming language

Source: https://realpython.com/python-virtual-environments-a-primer/&sa=U&ved=2ahUKEwie3put9quGAxXWFlkFHSgsAb8QFnoECAoQAg&usg=AOvVaw1uX13K4qS_KxVwz1OF1FRA

0 notes

Text

youtube

Python Numpy Tutorials

#numpy tutorials#numpy for beginners#numpy arrays#what is array in numpy#numpy full array#how to create numpy full array#what is numpy full array#how to use numpy full array#uses of numpy full array#how to define the shape of numpy array#python for beginners#python full course#numpy full course#numpy python playlist#numpy playlist#complete python numpy tutorials#numpy full array function#python array#python numpy library#how to create arrays in python numpy#Youtube

0 notes

Link

#python #NumPy is a goto library for any numerical computation including data science. Here is how you can learn NumPy - 1 Function at a time

2 notes

·

View notes

Text

Best python ide for gui

#BEST PYTHON IDE FOR GUI CODE#

PyQt applications run much better in practice from a user perspective than something like Electron. OTOH, with something like Electron, you are going through a browsery DOM with a bunch more layers of abstraction between you and the meat of what's actually happening.

#BEST PYTHON IDE FOR GUI CODE#

If you are doing normal GUI development, you are only using Python to orchestrate objects that are implemented in C++, so the actual implementation of the widgets is all native code that runs great. (But both are terrible languages for that.) If you are doing hard core number crunching or the like, Javascript is much faster than pure Python. > I bet you money electron is faster than python. I have used that combination on several commercial ventures in the past. Generally speaking, a cross-platform toolkit and a cross-platform language can get you into most places, and both Qt and Python fit that bill. What do you mean by "fast and efficient"? Python and Qt can handle user interactions in real-time, even with hundreds of controls. So it depends on what you want to use it for. Android and iOS each have their own standard languages and frameworks. If you are having to learn a toolkit either way, it's worth considering.ĭepending on your experience and intended platforms, you may prefer other language/framework combinations. I don't know as much about it, but I do know that it's quite capable. Not quite as fancy, but quite capable.Ī more recent toolkit is kivy. There's also wxWidgets, which is fine too. PySide provides convenient, reliable interfaces to Qt within Python.Qt is very powerful with basically everything you could ever think of in a toolkit (and a bunch more stuff you'll never use).My employer uses Qt through PySide and it's great. Introduction to Programming with Python (from Microsoft Virtual Academy)./r/git and /r/mercurial - don't forget to put your code in a repo!./r/pyladies (women developers who love python)./r/coolgithubprojects (filtered on Python projects)./r/pystats (python in statistical analysis and machine learning)./r/inventwithpython (for the books written by /u/AlSweigart)./r/pygame (a set of modules designed for writing games)./r/django (web framework for perfectionists with deadlines)./r/pythoncoding (strict moderation policy for 'programming only' articles).NumPy & SciPy (Scientific computing) & Pandas.Transcrypt (Hi res SVG using Python 3.6 and turtle module).Brython (Python 3 implementation for client-side web programming).PythonAnywhere (basic accounts are free).(Evolved from the language-agnostic parts of IPython, Python 3).The Python Challenge (solve each level through programming).Problem Solving with Algorithms and Data Structures.Udemy Tech Youtube channel - Python playlist Invent Your Own Computer Games with Pythonįive life jackets to throw to the new coder (things to do after getting a handle on python) Please use the flair selector to choose your topic.Īdd 4 extra spaces before each line of code def fibonacci(): Reddit filters them out, so your post or comment will be lost. If you are about to ask a "how do I do this in python" question, please try r/learnpython, the Python discord, or the #python IRC channel on Libera.chat. 12pm UTC – 2pm UTC: Bringing ML Models into Production Bootcamp News about the dynamic, interpreted, interactive, object-oriented, extensible programming language Python Current Events

0 notes

Text

Randomly ketemu profesor dari India di linkedin, terus kan gue dm tuh si profesor, karena kagak paham dia profesor, gue panggil dia pake nama langsung awalnya haha. Abis sapa sapaan di linkedin, dia nawarin internship. Gue isi dah tuh formnya. Internshipnya ini agendanya ngerjain tugas daily sama weekly tentang python gitu. Abis gue daptar, gue diemail kalo gue diterima.

Terus dia ngasih tau metode intern nya kaya gimana, jadi dia udah provide video gitu di yutub ada sebuah playlist machine learning berisi 79 video soal numpy, pandas, scikit, gitu2. Dia gak provide link colab ataupun github buat sintax yang di yutub itu, jadi tugas gue tiap hari adalah menyalin sintax nya ke python dan bikin catatan terus di email tiap hari ke profesornya. As simple as that, tapi jujurly metode ini keren banget menurut gue sebagai seseorang yang males belajar kalo ga ada trigger. Ini membantu banget buat gue bisa konsisten belajar tiap hari gitu lho huhu. Baiknya, si profesor ini ngasih ini cuma2, terus orangnya responsif, apresiatif, duh bae banget dah pokoknya. Aku padamu negeri prindapan. Dia tiap abis gue ngumpulin tugas nge chat gue.

“you’re doing a good work dwi, keep it up” gitu

trus dia juga bilang gini

“Free education is abundant, all over the internet. It’s the desire to learn that scarce”

Mungkin berangkat dari situ lah dia membikin internship ini, karena kelangkaan minat belajar. Jadi dia ngga cuma bikin konten di yutub doang, karena dia sadar udah banyak yang bikin.

Jujurly metode belajar berbayar pun ga mempan di gue, meski udah beli di udemy, ato mungkin bootcamp sekalipun, kalo metodenya kurang pas gue akan tetep males. Gue butuh dipaksa untuk ngerjain tiap hari, dan gue seneng banget ketemu nih prof nirmal negeri prindapan.

1 note

·

View note

Text

Machine Learning

https://youtube.com/playlist?list=PLCmAM0wpuQ4910msQ5Bs8txh2LqvWLV4R

Python Tkinter Library

https://youtube.com/playlist?list=PLCmAM0wpuQ49Mgfe1wz8tCQRh3NMWtHhq

Java Full course

https://youtube.com/playlist?list=PLCmAM0wpuQ48HV8ph_oKf1haNpntsFGAr

Pandas Full cours

https://youtube.com/playlist?list=PLCmAM0wpuQ4-vdg-JtGgRm0rgMC1QnvH8

WordPress full course

https://youtube.com/playlist?list=PLCmAM0wpuQ49HpucvtT7dMe7L2X2BBsAO

Numpy

https://youtube.com/playlist?list=PLCmAM0wpuQ4_82zMt05FNLMrWaGLLP-4H

String function in c program

https://youtube.com/playlist?list=PLCmAM0wpuQ4-4iQsIovYPxY-lDc714aln

Matplotlib data visualization

https://youtube.com/playlist?list=PLCmAM0wpuQ49cqfUZRBP-nOQBeCqLKxZ5

Python

https://youtube.com/playlist?list=PLCmAM0wpuQ484UV_lo5l_F0IZLdk1H4z4

Ethical hacking

https://youtube.com/playlist?list=PLCmAM0wpuQ48Oet9rkIItDkDjhzpq8fLD

Subscribe my youtube channel and press bell icon to get instant notification when we will upload video

#programming #SmartCoding #machinelearning #bestprogramever #video

0 notes

Text

My Programming Journey: Understanding Music Genres with Machine Learning

Artificial Intelligence is used everyday, by regular people and businesses, creating such a positive impact in all kinds of industries and fields that it makes me think that AI is only here to stay and grow, and help society grow with it. AI has evolved considerably in the last decade, currently being able to do things that seem taken out of a Sci-Fi movie, like driving cars, recognizing faces and words (written and spoken), and music genres.

While Music is definitely not the most profitable application of Machine Learning, it has benefited tremendously from Deep Learning and other ML applications. The potential AI possess in the music industry includes automating services and discovering insights and patterns to classify and/or recommend music.

We can be witnesses to this potential when we go to our preferred music streaming service (such as Spotify or Apple Music) and, based on the songs we listen to or the ones we’ve previously saved, we are given playlists of similar songs that we might also like.

Machine Learning’s ability of recognition isn’t just limited to faces or words, but it can also recognize instruments used in music. Music source separation is also a thing, where a song is taken and its original signals are separated from a mixture audio signal. We can also call this Feature Extraction and it is popularly used nowadays to aid throughout the cycle of music from composition and recording to production. All of this is doable thanks to a subfield of Music Machine Learning: Music Information Retrieval (MIR). MIR is needed for almost all applications related to Music Machine Learning. We’ll dive a bit deeper on this subfield.

Music Information Retrieval

Music Information Retrieval (MIR) is an interdisciplinary field of Computer Science, Musicology, Statistics, Signal Processing, among others; the information within music is not as simple as it looks like. MIR is used to categorize, manipulate and even create music. This is done by audio analysis, which includes pitch detection, instrument identification and extraction of harmonic, rhythmic and/or melodic information. Plain information can be easily comprehended (such as tempo (beats per minute), melody, timbre, etc.) and easily calculated through different genres. However, many music concepts considered by humans can’t be perfectly modeled to this day, given there are many factors outside music that play a role in its perception.

Getting Started

I wanted to try something more of a challenge for this post, so I am attempting to Visualize and Classify audio data using the famous GTZAN Dataset to perform an in depth analysis of sound and understand what features we can visualize/extract from this kind of data. This dataset consists of: · A collection of 10 genres with 100 audio (WAV) files each, each having a length of 30 seconds. This collection is stored in a folder called “genres_original”. · A visual representation for each audio file stored in a folder called “images_original”. The audio files were converted to Mel Spectrograms (later explained) to make them able to be classified through neural networks, which take in image representation. · 2 CVS files that contain features of the audio files. One file has a mean and variance computed over multiple features for each song (full length of 30 seconds). The second CVS file contains the same songs but split before into 3 seconds, multiplying the data times 10. For this project, I am yet again coding in Visual Studio Code. On my last project I used the Command Line from Anaconda (which is basically the same one from Windows with the python environment set up), however, for this project I need to visualize audio data and these representations can’t be done in CLI, so I will be running my code from Jupyter Lab, from Anaconda Navigator. Jupyter Lab is a web-based interactive development environment for Jupyter notebooks (documents that combine live runnable code with narrative text, equations, images and other interactive visualizations). If you haven’t installed Anaconda Navigator already, you can find the installation steps on my previous blog post. I would quickly like to mention that Tumblr has a limit of 10 images per post, and this is a lengthy project so I’ll paste the code here instead of uploading code screenshots, and only post the images of the outputs. The libraries we will be using are:

> pandas: a data analysis and manipulation library.

> numpy: to work with arrays.

> seaborn: to visualize statistical data based on matplolib.

> matplotlib.pyplot: a collection of functions to create static, animated and interactive visualizations.

> Sklearn: provides various tools for model fitting, data preprocessing, model selection and evaluation, among others.

· naive_bayes

· linear_model

· neighbors

· tree

· ensemble

· svm

· neural_network

· metrics

· preprocessing

· decomposition

· model_selection

· feature_selection

> librosa: for music and audio analysis to create MIR systems.

· display

> IPython: interactive Python

· display import Audio

> os: module to provide functions for interacting with the operating system.

> xgboost: gradient boosting library

· XGBClassifier, XGBRFClassifier

· plot_tree, plot_importance

> tensorflow:

· Keras

· Sequential and layers

Exploring Audio Data

Sounds are pressure waves, which can be represented by numbers over a time period. We first need to understand our audio data to see how it looks. Let’s begin with importing the libraries and loading the data:

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

import sklearn

import librosa

import librosa.display

import IPython.display as ipd

from IPython.display import Audio

import os

from sklearn.naive_bayes import GaussianNB

from sklearn.linear_model import SGDClassifier, LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.svm import SVC

from sklearn.neural_network import MLPClassifier

from xgboost import XGBClassifier, XGBRFClassifier

from xgboost import plot_tree, plot_importance

from sklearn.metrics import confusion_matrix, accuracy_score, roc_auc_score, roc_curve

from sklearn import preprocessing

from sklearn.decomposition import PCA

from sklearn.model_selection import train_test_split

from sklearn.feature_selection import RFE

from tensorflow.keras import Sequential

from tensorflow.keras.layers import *

import warnings

warnings.filterwarnings('ignore')

# Loading the data

general_path = 'C:/Users/807930/Documents/Spring 2021/Emerging Trends in Technology/MusicGenre/input/gtzan-database-music-genre-classification/Data'

Now let’s load one of the files (I chose Hit Me Baby One More Time by Britney Spears):

print(list(os.listdir(f'{general_path}/genres_original/')))

#Importing 1 file to explore how our Audio Data looks.

y, sr = librosa.load(f'{general_path}/genres_original/pop/pop.00019.wav')

#Playing the audio

ipd.display(ipd.Audio(y, rate=sr, autoplay=True))

print('Sound (Sequence of vibrations):', y, '\n')

print('Sound shape:', np.shape(y), '\n')

print('Sample Rate (KHz):', sr, '\n')

# Verify length of the audio

print('Check Length of Audio:', 661794/22050)

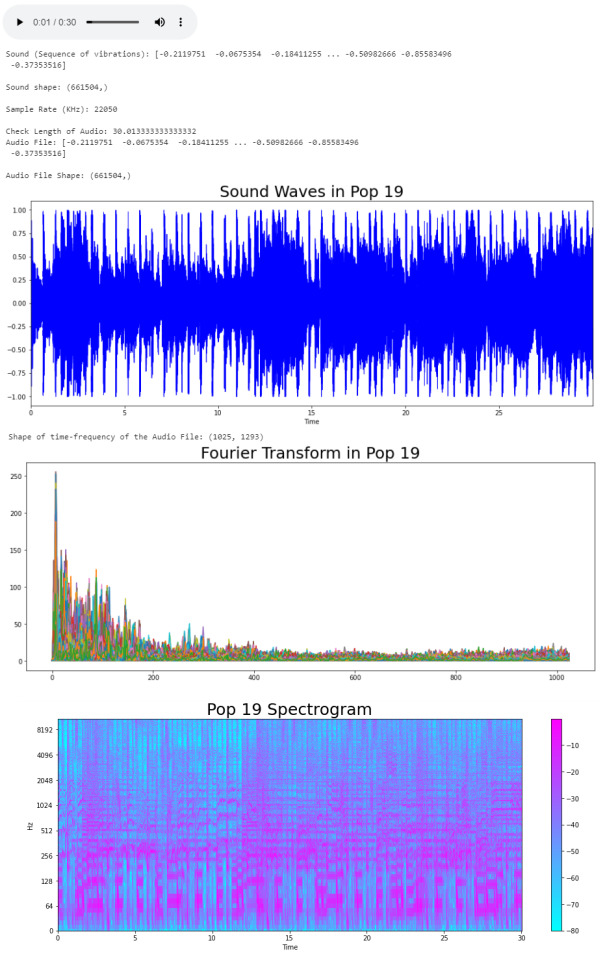

We took the song and using the load function from the librosa library, we got an array of the audio time series (sound) and the sample rate of sound. The length of the audio is 30 seconds. Now we can trim our audio to remove the silence between songs and use the librosa.display.waveplot function to plot the audio file into a waveform. > Waveform: The waveform of an audio signal is the shape of its graph as a function of time.

# Trim silence before and after the actual audio

audio_file, _ = librosa.effects.trim(y)

print('Audio File:', audio_file, '\n')

print('Audio File Shape:', np.shape(audio_file))

#Sound Waves 2D Representation

plt.figure(figsize = (16, 6))

librosa.display.waveplot(y = audio_file, sr = sr, color = "b");

plt.title("Sound Waves in Pop 19", fontsize = 25);

After having represented the audio visually, we will plot a Fourier Transform (D) from the frequencies and amplitudes of the audio data. > Fourier Transform: A mathematical function that maps the frequency and phase content of local sections of a signal as it changes over time. This means that it takes a time-based pattern (in this case, a waveform) and retrieves the complex valued function of frequency, as a sine wave. The signal is converted into individual spectral components and provides frequency information about the signal.

#Default Fast Fourier Transforms (FFT)

n_fft = 2048 # window size

hop_length = 512 # number audio of frames between STFT columns

# Short-time Fourier transform (STFT)

D = np.abs(librosa.stft(audio_file, n_fft = n_fft, hop_length = hop_length))

print('Shape of time-frequency of the Audio File:', np.shape(D))

plt.figure(figsize = (16, 6))

plt.plot(D);

plt.title("Fourier Transform in Pop 19", fontsize = 25);

The Fourier Transform only gives us information about the frequency values and now we need a visual representation of the frequencies of the audio signal so we can calculate more audio features for our system. To do this we will plot the previous Fourier Transform (D) into a Spectrogram (DB). > Spectrogram: A visual representation of the spectrum of frequencies of a signal as it varies with time.

DB = librosa.amplitude_to_db(D, ref = np.max)

# Creating the Spectrogram

plt.figure(figsize = (16, 6))

librosa.display.specshow(DB, sr = sr, hop_length = hop_length, x_axis = 'time', y_axis = 'log'

cmap = 'cool')

plt.colorbar();

plt.title("Pop 19 Spectrogram", fontsize = 25);

The output:

Audio Features

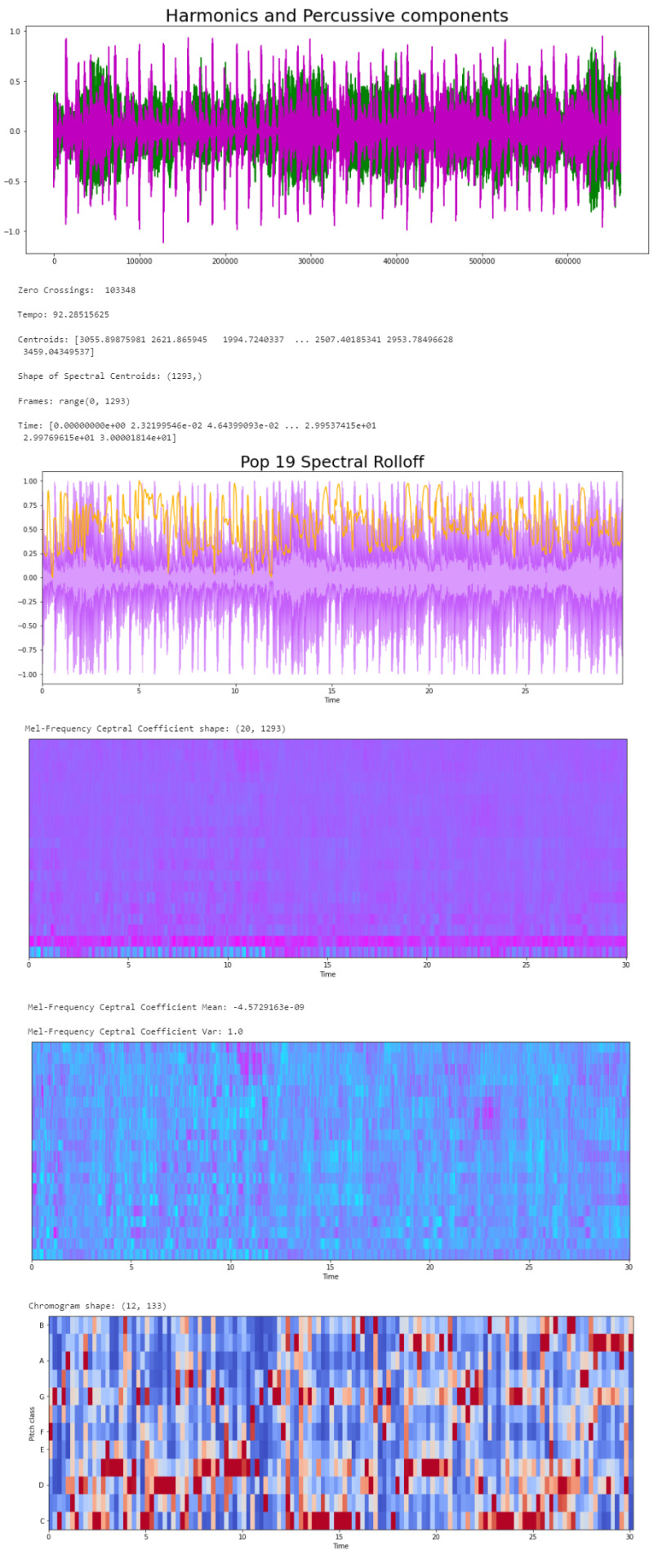

Now that we know what the audio data looks like to python, we can proceed to extract the Audio Features. The features we will need to extract, based on the provided CSV, are: · Harmonics · Percussion · Zero Crossing Rate · Tempo · Spectral Centroid · Spectral Rollof · Mel-Frequency Cepstral Coefficients · Chroma Frequencies Let’s start with the Harmonics and Percussive components:

# Decompose the Harmonics and Percussive components and show Representation

y_harm, y_perc = librosa.effects.hpss(audio_file)

plt.figure(figsize = (16, 6))

plt.plot(y_harm, color = 'g');

plt.plot(y_perc, color = 'm');

plt.title("Harmonics and Percussive components", fontsize = 25);

Using the librosa.effects.hpss function, we are able to separate the harmonics and percussive elements from the audio source and plot it into a visual representation.

Now we can retrieve the Zero Crossing Rate, using the librosa.zero_crossings function.

> Zero Crossing Rate: The rate of sign-changes (the number of times the signal changes value) of the audio signal during the frame.

#Total number of zero crossings

zero_crossings = librosa.zero_crossings(audio_file, pad=False)

print(sum(zero_crossings))

The Tempo (Beats per Minute) can be retrieved using the librosa.beat.beat_track function.

# Retrieving the Tempo in Pop 19

tempo, _ = librosa.beat.beat_track(y, sr = sr)

print('Tempo:', tempo , '\n')

The next feature extracted is the Spectral Centroids. > Spectral Centroid: a measure used in digital signal processing to characterize a spectrum. It determines the frequency area around which most of the signal energy concentrates.

# Calculate the Spectral Centroids

spectral_centroids = librosa.feature.spectral_centroid(audio_file, sr=sr)[0]

print('Centroids:', spectral_centroids, '\n')

print('Shape of Spectral Centroids:', spectral_centroids.shape, '\n')

# Computing the time variable for visualization

frames = range(len(spectral_centroids))

# Converts frame counts to time (seconds)

t = librosa.frames_to_time(frames)

print('Frames:', frames, '\n')

print('Time:', t)

Now that we have the shape of the spectral centroids as an array and the time variable (from frame counts), we need to create a function that normalizes the data. Normalization is a technique used to adjust the volume of audio files to a standard level which allows the file to be processed clearly. Once it’s normalized we proceed to retrieve the Spectral Rolloff.

> Spectral Rolloff: the frequency under which the cutoff of the total energy of the spectrum is contained, used to distinguish between sounds. The measure of the shape of the signal.

# Function that normalizes the Sound Data

def normalize(x, axis=0):

return sklearn.preprocessing.minmax_scale(x, axis=axis)

# Spectral RollOff Vector

spectral_rolloff = librosa.feature.spectral_rolloff(audio_file, sr=sr)[0]

plt.figure(figsize = (16, 6))

librosa.display.waveplot(audio_file, sr=sr, alpha=0.4, color = '#A300F9');

plt.plot(t, normalize(spectral_rolloff), color='#FFB100');

Using the audio file, we can continue to get the Mel-Frequency Cepstral Coefficients, which are a set of 20 features. In Music Information Retrieval, it’s often used to describe timbre. We will employ the librosa.feature.mfcc function.

mfccs = librosa.feature.mfcc(audio_file, sr=sr)

print('Mel-Frequency Ceptral Coefficient shape:', mfccs.shape)

#Displaying the Mel-Frequency Cepstral Coefficients:

plt.figure(figsize = (16, 6))

librosa.display.specshow(mfccs, sr=sr, x_axis='time', cmap = 'cool');

The MFCC shape is (20, 1,293), which means that the librosa.feature.mfcc function computed 20 coefficients over 1,293 frames.

mfccs = sklearn.preprocessing.scale(mfccs, axis=1)

print('Mean:', mfccs.mean(), '\n')

print('Var:', mfccs.var())

plt.figure(figsize = (16, 6))

librosa.display.specshow(mfccs, sr=sr, x_axis='time', cmap = 'cool');

Now we retrieve the Chroma Frequencies, using librosa.feature.chroma_stft. > Chroma Frequencies (or Features): are a powerful tool for analyzing music by categorizing pitches. These features capture harmonic and melodic characteristics of music.

# Increase or decrease hop_length to change how granular you want your data to be

hop_length = 5000

# Chromogram

chromagram = librosa.feature.chroma_stft(audio_file, sr=sr, hop_length=hop_length)

print('Chromogram shape:', chromagram.shape)

plt.figure(figsize=(16, 6))

librosa.display.specshow(chromagram, x_axis='time', y_axis='chroma', hop_length=hop_length, cmap='coolwarm');

The output:

Exploratory Data Analysis

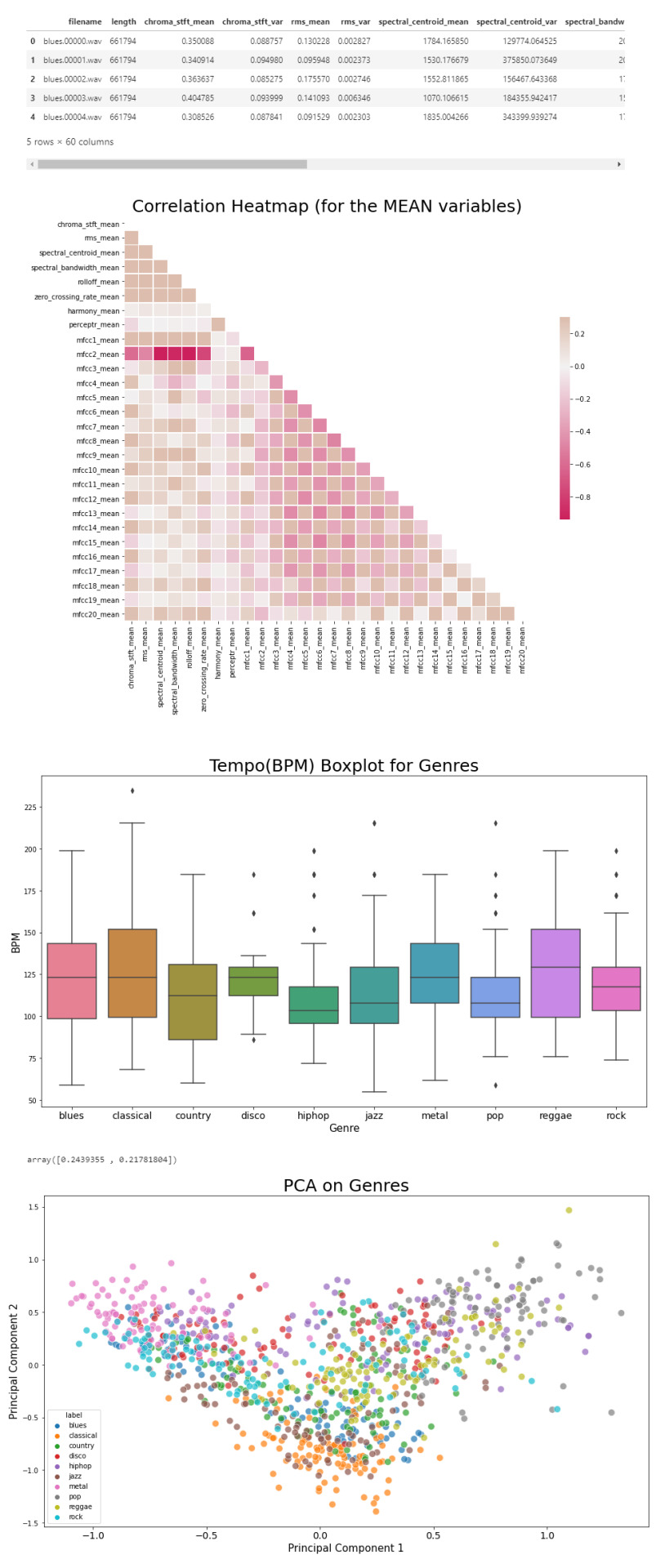

Now that we have a visual understanding of what an audio file looks like, and we’ve explored a good set of features, we can perform EDA, or Exploratory Data Analysis. This is all about getting to know the data and data profiling, summarizing the dataset through descriptive statistics. We can do this by getting a description of the data, using the describe() function or head() function. The describe() function will give us a description of all the dataset rows, and the head() function will give us the written data. We will perform EDA on the csv file, which contains all of the features previously analyzed above, and use the head() function:

# Loading the CSV file

data = pd.read_csv(f'{general_path}/features_30_sec.csv')

data.head()

Now we can create the correlation matrix of the data found in the csv file, using the feature means (average). We do this to summarize our data and pass it into a Correlation Heatmap.

# Computing the Correlation Matrix

spike_cols = [col for col in data.columns if 'mean' in col]

corr = data[spike_cols].corr()

The corr() function finds a pairwise correlation of all columns, excluding non-numeric and null values.

Now we can plot the heatmap:

# Generate a mask for the upper triangle

mask = np.triu(np.ones_like(corr, dtype=np.bool))

# Set up the matplotlib figure

f, ax = plt.subplots(figsize=(16, 11));

# Generate a custom diverging colormap

cmap = sns.diverging_palette(0, 25, as_cmap=True, s = 90, l = 45, n = 5)

# Draw the heatmap with the mask and correct aspect ratio

sns.heatmap(corr, mask=mask, cmap=cmap, vmax=.3, center=0,

square=True, linewidths=.5, cbar_kws={"shrink": .5}

plt.title('Correlation Heatmap (for the MEAN variables)', fontsize = 25)

plt.xticks(fontsize = 10)

plt.yticks(fontsize = 10);

Now we will take the data and, extracting the label(genre) and the tempo, we will draw a Box Plot. Box Plots visually show the distribution of numerical data through displaying percentiles and averages.

# Setting the axis for the box plot

x = data[["label", "tempo"]]

f, ax = plt.subplots(figsize=(16, 9));

sns.boxplot(x = "label", y = "tempo", data = x, palette = 'husl');

plt.title('Tempo(BPM) Boxplot for Genres', fontsize = 25)

plt.xticks(fontsize = 14)

plt.yticks(fontsize = 10);

plt.xlabel("Genre", fontsize = 15)

plt.ylabel("BPM", fontsize = 15)

Now we will draw a Scatter Diagram. To do this, we need to visualize possible groups of genres:

# To visualize possible groups of genres

data = data.iloc[0:, 1:]

y = data['label']

X = data.loc[:, data.columns != 'label']

We use data.iloc to get rows and columns at integer locations, and data.loc to get rows and columns with particular labels, excluding the label column. The next step is to normalize our data:

# Normalization

cols = X.columns

min_max_scaler = preprocessing.MinMaxScaler()

np_scaled = min_max_scaler.fit_transform(X)

X = pd.DataFrame(np_scaled, columns = cols)

Using the preprocessing library, we rescale each feature to a given range. Then we add a fit to data and transform (fit_transform).

We can proceed with a Principal Component Analysis:

# Principal Component Analysis

pca = PCA(n_components=2)

principalComponents = pca.fit_transform(X)

principalDf = pd.DataFrame(data = principalComponents, columns = ['principal component 1', 'principal component 2'])

# concatenate with target label

finalDf = pd.concat([principalDf, y], axis = 1)

PCA is used to reduce dimensionality in data. The fit learns some quantities from the data. Before the fit transform, the data shape was [1000, 58], meaning there’s 1000 rows with 58 columns (in the CSV file there’s 60 columns but two of these are string values, so it leaves with 58 numeric columns).

Once we use the PCA function, and set the components number to 2 we reduce the dimension of our project from 58 to 2. We have found the optimal stretch and rotation in our 58-dimension space to see the layout in two dimensions.

After reducing the dimensional space, we lose some variance(information).

pca.explained_variance_ratio_

By using this attribute we get the explained variance ratio, which we sum to get the percentage. In this case the variance explained is 46.53% .

plt.figure(figsize = (16, 9))

sns.scatterplot(x = "principal component 1", y = "principal component 2", data = finalDf, hue = "label", alpha = 0.7,

s = 100);

plt.title('PCA on Genres', fontsize = 25)

plt.xticks(fontsize = 14)

plt.yticks(fontsize = 10);

plt.xlabel("Principal Component 1", fontsize = 15)

plt.ylabel("Principal Component 2", fontsize = 15)

plt.savefig("PCA Scattert.jpg")

The output:

Genre Classification

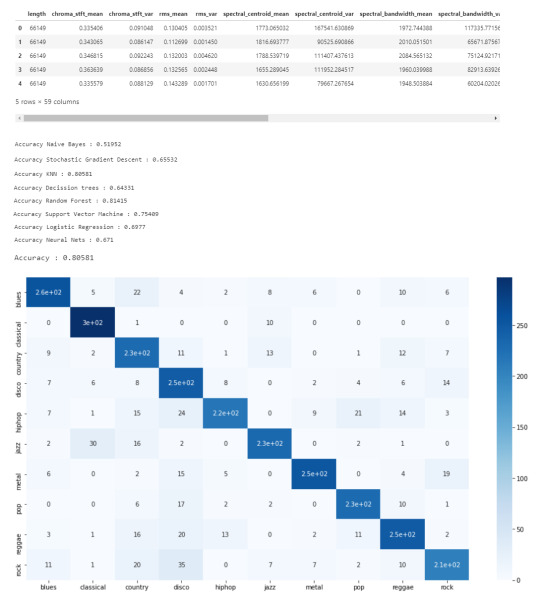

Now we know what our data looks like, the features it has and have analyzed the principal component on all genres. All we have left to do is to build a classifier model that will predict any new audio data input its genre. We will use the CSV with 10 times the data for this.

# Load the data

data = pd.read_csv(f'{general_path}/features_3_sec.csv')

data = data.iloc[0:, 1:]

data.head()

Once again visualizing and normalizing the data.

y = data['label'] # genre variable.

X = data.loc[:, data.columns != 'label'] #select all columns but not the labels

# Normalization

cols = X.columns

min_max_scaler = preprocessing.MinMaxScaler()

np_scaled = min_max_scaler.fit_transform(X)

# new data frame with the new scaled data.

X = pd.DataFrame(np_scaled, columns = cols)

Now we have to split the data for training. Like I did in my previous post, the proportions are (70:30). 70% of the data will be used for training and 30% of the data will be used for testing.

# Split the data for training

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

I tested 7 algorithms but I decided to go with K Nearest-Neighbors because I had previously used it.

knn = KNeighborsClassifier(n_neighbors=19)

knn.fit(X_train, y_train)

preds = knn.predict(X_test)

print('Accuracy', ':', round(accuracy_score(y_test, preds), 5), '\n')

# Confusion Matrix

confusion_matr = confusion_matrix(y_test, preds) #normalize = 'true'

plt.figure(figsize = (16, 9))

sns.heatmap(confusion_matr, cmap="Blues", annot=True,

xticklabels = ["blues", "classical", "country", "disco", "hiphop", "jazz", "metal", "pop", "reggae", "rock"],

yticklabels=["blues", "classical", "country", "disco", "hiphop", "jazz", "metal", "pop", "reggae", "rock"]);

The output:

youtube

References

· https://medium.com/@james_52456/machine-learning-and-the-future-of-music-an-era-of-ml-artists-9be5ef27b83e

· https://www.kaggle.com/andradaolteanu/work-w-audio-data-visualise-classify-recommend/

· https://www.kaggle.com/dapy15/music-genre-classification/notebook

· https://towardsdatascience.com/how-to-start-implementing-machine-learning-to-music-4bd2edccce1f

· https://en.wikipedia.org/wiki/Music_information_retrieval

· https://pandas.pydata.org

· https://scikit-learn.org/

· https://seaborn.pydata.org

· https://matplotlib.org

· https://librosa.org/doc/main/index.html

· https://github.com/dmlc/xgboost

· https://docs.python.org/3/library/os.html

· https://www.tensorflow.org/

· https://www.hindawi.com/journals/sp/2021/1651560/

0 notes

Text

Anjuum Khanna – Top 3 Machine Learning Projects for Beginners

Anjuum Khanna – In the IT sector, it is more helpful to work on practical projects than theoretical knowledge. It is important to get theoretical knowledge, but in the end, this knowledge we will apply in our projects. Working on real world projects helps us with how the algorithm works, if we made a slight change to this code how it would affect the projects.

In this blog post, you will discover how beginners like you can gain incredible progress in applying Machine learning to real-world problems with these awesome machine learning projects for beginners recommended by Anjuum Khanna.

Top 3 machine learning projects for beginners that cover the core aspects of machine learning such as regression, unsupervised learning. In all these machine learning projects you will start with real world datasets that are freely accessible.

Top 3 Machine Learning Projects for Beginners

1) Sales Forecasting using Walmart Dataset

This project is available on github, created by Gagandeep Singh Khanju, This is a Regression based modelling project to forecast the sales of Walmart. This project was created on Jupyter notebook, and for this project he used the “Walmart Store Sales Forecasting” dataset, which was available on Kaggle. Walmart is probably the biggest retailer worldwide and it is significant for them to have precise conjectures for their deals in different departments. Since there can be numerous components that can influence the deals for each division, it becomes basic that he distinguish the key factors that have an impact in driving the deals and use them to build up a model that can help in estimating the deals with some exactness. According to him “ In this project, he conducted multiple linear regression to predict the future sales. There were several different factors that he analyzed in his regression model starting with a full model with all the variables and then moving towards a reduced model by eliminating insignificant variables. He used several different exploratory analyses to identify the key variables for his regression equation such as correlation plots, heatmaps, histograms etc.”

2) BigMart Sales Predictions

This project is available on github, created by Gurudev Aradhye. This is a Regression based modelling project which can be tried to solve using two approaches XGBoost with hypertunning and Random forest with hypertunning. This project was created on Jupyter notebook, packages which he used in the project are pandas, numpy, sklearn, matplotlib and for this project you can use the “BigMart Sales predictions” dataset, which was available on Kaggle. According to him, “These two algorithms had their own importance and uses. The XGBoost is used in many competitions. Here hypertunning is performed with Greedy Search which initially takes some initial parameter values then it will search for parameter values which increases the accuracy of the model. Some details about problems are, The goal is to find item sales at Outlet of different types & located at different locations, It includes tasks such as data visualization, cleaning and transformation, feature engineering.

3) Music Recommendation system

This project is available on Github, created by Sarath Sattiraju. This is a simple Music Recommendation System based on an unsupervised learning system which analyses multiple users playlists and gives recommendations for a particular playlist of a user. This model is a user-to-user based recommendation system. The dataset considered for this project is the music analysis dataset FMA. This project was created on Jupyter notebook, Clustering algorithms were used to provide predictions for the data. Recommendations were given based on the frequent genre, frequent artist, top 10 songs.

About Anjuum Khanna, Tech Blogger

Anjuum Khanna a strategic leader with a proven track record of over 19 years in spread heading profitable ventures within Fintech, eCom Startups, BPOs, Telecom & D2H, spearheaded domestic & Global Business Operations with large team sizes. Championed change management & enterprise wise automation initiatives within organizations in India & Middle East. Presently working as Vice President at Mswipe Technologies.

Explore more Blogs:

Anjuum Khanna – How Robotics, AI and Automation Are shaping the future of World

Anjuum Khanna – 4 AI Projects That Cover All Basics

Anjuum Khanna – Top 5 most popular machine learning tools

visit : https://anjuumkhanna.in/

#anjuum khanna blogs#anjuum khanna tech blogger#anjuum khanna consultant#anjuum khanna#anjuum khanna machine learning tools#anjuum khanna machine learning projects

0 notes

Text

machine learning study resources from ocdevel by Tyler Renelle

basic algorithms

Tour of Machine Learning Algorithms - link

The Master Algorithm - book

math

KhanAcademy:

Either LinAlg course:medium OR Fast.ai course:medium

Stats course:medium

Calc course:medium

Books

Introduction to Linear Algebra book:hard

All of statistics book:hard

Calculus book:hard

Audio (supplementary material)

Statistics, Probability audio|course:hard

Calculus 1, 2, 3 audio|course:hard

Mathematical Decision Making audio|course:hard course on "Operations Research", similar to ML

Information Theory audio|course:hard

deep learining

Resources

Deep Learning Simplified video:easy quick series to get a lay-of-the-land.

TensorFlow Tutorials tutorial:medium

Fast.ai course:medium practical DL for coders

Hands-On Machine Learning with Scikit-Learn and TensorFlow book:medium

Deep Learning Book (Free HTML version) book:hard comprehensive DL bible; highly mathematical

Languages & Frameworks

Resources

Python book:medium

Python for Data Analysis: Data Wrangling with Pandas, NumPy, and IPython 2nd Edition book:easy

TensorFlow Tutorials tutorial:medium

Hands-On Machine Learning with Scikit-Learn and TensorFlow book:medium

Checkpoint

45m/d ML

Coursera course:hard

Python book:medium

Deep Learning Resources

15m/d Math (KhanAcademy)

Either LinAlg course:medium OR Fast.ai course:medium

Stats course:medium

Calc course:medium

Audio

The Master Algorithm audio:medium Semi-technical overview of ML basics & main algorithms

Mathematical Decision Making audio|course:hard course on "Operations Research", similar to ML

Statistics, Probability audio|course:hard

Calculus 1, 2, 3 audio|course:hard

Shallow Algos 1

Resources

Tour of Machine Learning Algorithms article:easy

Elements of Statistical Learning book:hard

Pattern Recognition and Machine Learning (Free PDF?) book:hard

Hands-On Machine Learning with Scikit-Learn and TensorFlow book:medium (replaced R book)

Which algo to use?

Pros/cons table for algos picture

Decision tree of algos picture

Mathematical Decision Making audio|course:hard course on "Operations Research", similar to ML

Consciousness

Resources

Philosophy of Mind: Brains, Consciousness, and Thinking Machines (Audible, TGC) audio:easy

Natural Language Processing 1

Resources

Speech and Language Processing book:hard comprehensive classical-NLP bible

Stanford NLP YouTube course|audio:medium If offline, skip to the Deep NLP playlist (see tweet).

NLTK Book book:medium

Deep NLP 1

Resources

Overview Articles:

Stanford cs224n: Deep NLP course:medium (replaces cs224d)

TensorFlow Tutorials tutorial:medium (start at Word2Vec + next 2 pages)

Deep Learning Resources

Unreasonable Effectiveness of RNNs article:easy

Deep Learning, NLP, and Representations article:medium

Understanding LSTM Networks article:medium

Deep Learning Book (Free HTML version) book:hard comprehensive DL bible; highly mathematical

Fast.ai course:medium practical DL for coders

Convolutional Neural Networks

Resources

Stanford cs231n: Convnets course:medium

Reinforcement Learning Intro

Resources

AI a Modern Approach. Website, Book book:hard

Berkeley cs294: Deep Reinforcement Learning course:hard

RL Course by David Silver course|audio:hard

0 notes

Text

Python Numpy Tutorials

#numpy tutorials#numpy for beginners#numpy arrays#what is array in numpy#numpy full array#how to create numpy full array#what is numpy full array#how to use numpy full array#uses of numpy full array#how to define the shape of numpy array#python for beginners#python full course#numpy full course#numpy python playlist#numpy playlist#complete python numpy tutorials#numpy full array function#python array#python numpy library#how to create arrays in python numpy

0 notes

Link

Businesses and organizations are increasingly using video and audio content for a variety of functions, such as advertising, customer service, media post-production, employee training, and education. As the volume of multimedia content generated by these activities proliferates, businesses are demanding high-quality transcripts of video and audio to organize files, enable text queries, and improve accessibility to audiences who are deaf or hard of hearing (466 million with disabling hearing loss worldwide) or language learners (1.5 billion English language learners worldwide).

Traditional speech-to-text transcription methods typically involve manual, time-consuming, and expensive human labor. Powered by machine learning (ML), Amazon Transcribe is a speech-to-text service that delivers high-quality, low-cost, and timely transcripts for business use cases and developer applications. In the case of transcribing domain-specific terminologies in fields such as legal, financial, construction, higher education, or engineering, the custom vocabularies feature can improve transcription quality. To use this feature, you create a list of domain-specific terms and reference that vocabulary file when running transcription jobs.

This post shows you how to use Amazon Augmented AI (Amazon A2I) to help generate this list of domain-specific terms by sending low-confidence predictions from Amazon Transcribe to humans for review. We measure the word error rate (WER) of transcriptions and number of correctly-transcribed terms to demonstrate how to use custom vocabularies to improve transcription of domain-specific terms in Amazon Transcribe.

To complete this use case, use the notebook A2I-Video-Transcription-with-Amazon-Transcribe.ipynb on the Amazon A2I Sample Jupyter Notebook GitHub repo.

Example of mis-transcribed annotation of the technical term, “an EC2 instance”. This term was transcribed as “Annecy two instance”.

Example of correctly transcribed annotation of the technical term “an EC2 instance” after using Amazon A2I to build an Amazon Transcribe custom vocabulary and re-transcribing the video.

This walkthrough focuses on transcribing video content. You can modify the code provided to use audio files (such as MP3 files) by doing the following:

Upload audio files to your Amazon Simple Storage Service (Amazon S3) bucket and using them in place of the video files provided.

Modify the button text and instructions in the worker task template provided in this walkthrough and tell workers to listen to and transcribe audio clips.

Solution overview

The following diagram presents the solution architecture.

We briefly outline the steps of the workflow as follows:

Perform initial transcription. You transcribe a video about Amazon SageMaker, which contains multiple mentions of technical ML and AWS terms. When using Amazon Transcribe out of the box, you may find that some of these technical mentions are mis-transcribed. You generate a distribution of confidence scores to see the number of terms that Amazon Transcribe has difficulty transcribing.

Create human review workflows with Amazon A2I. After you identify words with low-confidence scores, you can send them to a human to review and transcribe using Amazon A2I. You can make yourself a worker on your own private Amazon A2I work team and send the human review task to yourself so you can preview the worker UI and tools used to review video clips.

Build custom vocabularies using A2I results. You can parse the human-transcribed results collected from Amazon A2I to extract domain-specific terms and use these terms to create a custom vocabulary table.

Improve transcription using custom vocabulary. After you generate a custom vocabulary, you can call Amazon Transcribe again to get improved transcription results. You evaluate and compare the before and after performances using an industry standard called word error rate (WER).

Prerequisites

Before beginning, you need the following:

An AWS account.

An S3 bucket. Provide its name in BUCKET in the notebook. The bucket must be in the same Region as this Amazon SageMaker notebook instance.

An AWS Identity and Access Management (IAM) execution role with required permissions. The notebook automatically uses the role you used to create your notebook instance (see the next item in this list). Add the following permissions to this IAM role:

Attach managed policies AmazonAugmentedAIFullAccess and AmazonTranscribeFullAccess.

When you create your role, you specify Amazon S3 permissions. You can either allow that role to access all your resources in Amazon S3, or you can specify particular buckets. Make sure that your IAM role has access to the S3 bucket that you plan to use in this use case. This bucket must be in the same Region as your notebook instance.

An active Amazon SageMaker notebook instance. For more information, see Create a Notebook Instance. Open your notebook instance and upload the notebook A2I-Video-Transcription-with-Amazon-Transcribe.ipynb.

A private work team. A work team is a group of people that you select to review your documents. You can choose to create a work team from a workforce, which is made up of workers engaged through Amazon Mechanical Turk, vendor-managed workers, or your own private workers that you invite to work on your tasks. Whichever workforce type you choose, Amazon A2I takes care of sending tasks to workers. For this post, you create a work team using a private workforce and add yourself to the team to preview the Amazon A2I workflow. For instructions, see Create a Private Workforce. Record the ARN of this work team—you need it in the accompanying Jupyter notebook.

To understand this use case, the following are also recommended:

Basic understanding of AWS services like Amazon Transcribe, its features such as custom vocabularies, and the core components and workflow Amazon A2I uses.

The notebook uses the AWS SDK for Python (Boto3) to interact with these services.

Familiarity with Python and NumPy.

Basic familiarity with Amazon S3.

Getting started

After you complete the prerequisites, you’re ready to deploy this solution entirely on an Amazon SageMaker Jupyter notebook instance. Follow along in the notebook for the complete code.

To start, follow the Setup code cells to set up AWS resources and dependencies and upload the provided sample MP4 video files to your S3 bucket. For this use case, we analyze videos from the official AWS playlist on introductory Amazon SageMaker videos, also available on YouTube. The notebook walks through transcribing and viewing Amazon A2I tasks for a video about Amazon SageMaker Jupyter Notebook instances. In Steps 3 and 4, we analyze results for a larger dataset of four videos. The following table outlines the videos that are used in the notebook, and how they are used.

Video # Video Title File Name Function

1

Fully-Managed Notebook Instances with Amazon SageMaker – a Deep Dive Fully-Managed Notebook Instances with Amazon SageMaker – a Deep Dive.mp4 Perform the initial transcription and viewing sample Amazon A2I jobs in Steps 1 and 2.Build a custom vocabulary in Step 3

2

Built-in Machine Learning Algorithms with Amazon SageMaker – a Deep Dive Built-in Machine Learning Algorithms with Amazon SageMaker – a Deep Dive.mp4 Test transcription with the custom vocabulary in Step 4

3

Bring Your Own Custom ML Models with Amazon SageMaker Bring Your Own Custom ML Models with Amazon SageMaker.mp4 Build a custom vocabulary in Step 3

4

Train Your ML Models Accurately with Amazon SageMaker Train Your ML Models Accurately with Amazon SageMaker.mp4 Test transcription with the custom vocabulary in Step 4

In Step 4, we refer to videos 1 and 3 as the in-sample videos, meaning the videos used to build the custom vocabulary. Videos 2 and 4 are the out-sample videos, meaning videos that our workflow hasn’t seen before and are used to test how well our methodology can generalize to (identify technical terms from) new videos.

Feel free to experiment with additional videos downloaded by the notebook, or your own content.

Step 1: Performing the initial transcription

Our first step is to look at the performance of Amazon Transcribe without custom vocabulary or other modifications and establish a baseline of accuracy metrics.

Use the transcribe function to start a transcription job. You use vocab_name parameter later to specify custom vocabularies, and it’s currently defaulted to None. See the following code:

transcribe(job_names[0], folder_path+all_videos[0], BUCKET)

Wait until the transcription job displays COMPLETED. A transcription job for a 10–15-minute video typically takes up to 5 minutes.

When the transcription job is complete, the results is stored in an output JSON file called YOUR_JOB_NAME.json in your specified BUCKET. Use the get_transcript_text_and_timestamps function to parse this output and return several useful data structures. After calling this, all_sentences_and_times has, for each transcribed video, a list of objects containing sentences with their start time, end time, and confidence score. To save those to a text file for use later, enter the following code:

file0 = open("originaltranscript.txt","w") for tup in sentences_and_times_1: file0.write(tup['sentence'] + "\n") file0.close()

To look at the distribution of confidence scores, enter the following code:

from matplotlib import pyplot as plt plt.style.use('ggplot') flat_scores_list = all_scores[0] plt.xlim([min(flat_scores_list)-0.1, max(flat_scores_list)+0.1]) plt.hist(flat_scores_list, bins=20, alpha=0.5) plt.title('Plot of confidence scores') plt.xlabel('Confidence score') plt.ylabel('Frequency') plt.show()

The following graph illustrates the distribution of confidence scores.

Next, we filter out the high confidence scores to take a closer look at the lower ones.

You can experiment with different thresholds to see how many words fall below that threshold. For this use case, we use a threshold of 0.4, which corresponds to 16 words below this threshold. Sequences of words with a term under this threshold are sent to human review.

As you experiment with different thresholds and observe the number of tasks it creates in the Amazon A2I workflow, you can see a tradeoff between the number of mis-transcriptions you want to catch and the amount of time and resources you’re willing to devote to corrections. In other words, using a higher threshold captures a greater percentage of mis-transcriptions, but it also increases the number of false positives—low-confidence transcriptions that don’t actually contain any important technical term mis-transcriptions. The good news is that you can use this workflow to quickly experiment with as many different threshold values as you’d like before sending it to your workforce for human review. See the following code:

THRESHOLD = 0.4 # Filter scores that are less than THRESHOLD all_bad_scores = [i for i in flat_scores_list if i < THRESHOLD] print(f"There are {len(all_bad_scores)} words that have confidence score less than {THRESHOLD}") plt.xlim([min(all_bad_scores)-0.1, max(all_bad_scores)+0.1]) plt.hist(all_bad_scores, bins=20, alpha=0.5) plt.title(f'Plot of confidence scores less than {THRESHOLD}') plt.xlabel('Confidence score') plt.ylabel('Frequency') plt.show()

You get the following output:

There are 16 words that have confidence score less than 0.4

The following graph shows the distribution of confidence scores less than 0.4.

As you experiment with different thresholds, you can see a number of words classified with low confidence. As we see later, terms that are specific to highly technical domains are more difficult to automatically transcribe in general, so it’s important that we capture these terms and incorporate them into our custom vocabulary.

Step 2: Creating human review workflows with Amazon A2I

Our next step is to create a human review workflow (or flow definition) that sends low confidence scores to human reviewers and retrieves the corrected transcription they provide. The accompanying Jupyter notebook contains instructions for the following steps:

Create a workforce of human workers to review predictions. For this use case, creating a private workforce enables you to send Amazon A2I human review tasks to yourself so you can preview the worker UI.

Create a work task template that is displayed to workers for every task. The template is rendered with input data you provide, instructions to workers, and interactive tools to help workers complete your tasks.

Create a human review workflow, also called a flow definition. You use the flow definition to configure details about your human workforce and the human tasks they are assigned.

Create a human loop to start the human review workflow, sending data for human review as needed. In this example, you use a custom task type and start human loop tasks using the Amazon A2I Runtime API. Each time StartHumanLoop is called, a task is sent to human reviewers.

In the notebook, you create a human review workflow using the AWS Python SDK (Boto3) function create_flow_definition. You can also create human review workflows on the Amazon SageMaker console.

Setting up the worker task UI

Amazon A2I uses Liquid, an open-source template language that you can use to insert data dynamically into HTML files.

In this use case, we want each task to enable a human reviewer to watch a section of the video where low confidence words appear and transcribe the speech they hear. The HTML template consists of three main parts:

A video player with a replay button that only allows the reviewer to play the specific subsection

A form for the reviewer to type and submit what they hear

Logic written in JavaScript to give the replay button its intended functionality

The following code is the template you use:

<head> <style> h1 { color: black; font-family: verdana; font-size: 150%; } </style> </head> <script src="https://assets.crowd.aws/crowd-html-elements.js"></script> <crowd-form> <video id="this_vid"> <source src="" type="audio/mp4"> Your browser does not support the audio element. </video> <br /> <br /> <crowd-button onclick="onClick(); return false;"><h1> Click to play video section!</h1></crowd-button> <h3>Instructions</h3> <p>Transcribe the audio clip </p> <p>Ignore "umms", "hmms", "uhs" and other non-textual phrases. </p> <p>The original transcript is <strong>""</strong>. If the text matches the audio, you can copy and paste the same transcription.</p> <p>Ignore "umms", "hmms", "uhs" and other non-textual phrases. If a word is cut off in the beginning or end of the video clip, you do NOT need to transcribe that word. You also do NOT need to transcribe punctuation at the end of clauses or sentences. However, apostrophes and punctuation used in technical terms should still be included, such as "Denny's" or "file_name.txt"</p> <p><strong>Important:</strong> If you encounter a technical term that has multiple words, please <strong>hyphenate</strong> those words together. For example, "k nearest neighbors" should be transcribed as "k-nearest-neighbors."</p> <p>Click the space below to start typing.</p> <full-instructions header="Transcription Instructions"> <h2>Instructions</h2> <p>Click the play button and listen carefully to the audio clip. Type what you hear in the box below. Replay the clip by clicking the button again, as many times as needed.</p> </full-instructions> </crowd-form> <script> var video = document.getElementById('this_vid'); video.onloadedmetadata = function() { video.currentTime = ; }; function onClick() { video.pause(); video.currentTime = ; video.play(); video.ontimeupdate = function () { if (video.currentTime >= ) { video.pause() } } } </script>

The field allows you to grant access to and display a video to workers using a path to the video’s location in an S3 bucket. To prevent the reviewer from navigating to irrelevant sections of the video, the controls parameter is omitted from the video tag and a single replay button is included to control which section can be replayed.

Under the video player, the <crowd-text-area> HTML tag creates a submission form that your reviewer uses to type and submit.

At the end of the HTML snippet, the section enclosed by the <script> tag contains the JavaScript logic for the replay button. The and fields allow you to inject the start and end times of the video subsection you want transcribed for the current task.

You create a worker task template using the AWS Python SDK (Boto3) function create_human_task_ui. You can also create a human task template on the Amazon SageMaker console.

Creating human loops

After setting up the flow definition, we’re ready to use Amazon Transcribe and initiate human loops. While iterating through the list of transcribed words and their confidence scores, we create a human loop whenever the confidence score is below some threshold, CONFIDENCE_SCORE_THRESHOLD. A human loop is just a human review task that allows workers to review the clips of the video that Amazon Transcribe had difficulty with.

An important thing to consider is how we deal with a low-confidence word that is part of a phrase that was also mis-transcribed. To handle these cases, you use a function that gets the sequence of words centered about a given index, and the sequence’s starting and ending timestamps. See the following code:

def get_word_neighbors(words, index): """ gets the words transcribe found at most 3 away from the input index Returns: list: words at most 3 away from the input index int: starting time of the first word in the list int: ending time of the last word in the list """ i = max(0, index - 3) j = min(len(words) - 1, index + 3) return words[i: j + 1], words[i]["start_time"], words[j]["end_time"]

For every word we encounter with low confidence, we send its associated sequence of neighboring words for human review. See the following code:

human_loops_started = [] CONFIDENCE_SCORE_THRESHOLD = THRESHOLD i = 0 for obj in confidences_1: word = obj["content"] neighbors, start_time, end_time = get_word_neighbors(confidences_1, i) # Our condition for when we want to engage a human for review if (obj["confidence"] < CONFIDENCE_SCORE_THRESHOLD): # get the original sequence of words sequence = "" for block in neighbors: sequence += block['content'] + " " humanLoopName = str(uuid.uuid4()) # "initialValue": word, inputContent = { "filePath": job_uri_s3, "start_time": start_time, "end_time": end_time, "original_words": sequence } start_loop_response = a2i.start_human_loop( HumanLoopName=humanLoopName, FlowDefinitionArn=flowDefinitionArn, HumanLoopInput={ "InputContent": json.dumps(inputContent) } ) human_loops_started.append(humanLoopName) # print(f'Confidence score of {obj["confidence"]} is less than the threshold of {CONFIDENCE_SCORE_THRESHOLD}') # print(f'Starting human loop with name: {humanLoopName}') # print(f'Sending words from times {start_time} to {end_time} to review') print(f'The original transcription is ""{sequence}"" \n') i=i+1

For the first video, you should see output that looks like the following code:

========= Fully-Managed Notebook Instances with Amazon SageMaker - a Deep Dive.mp4 ========= The original transcription is "show up Under are easy to console " The original transcription is "And more cores see is compute optimized " The original transcription is "every version of Annecy two instance is " The original transcription is "distributing data sets wanted by putt mode " The original transcription is "onto your EBS volumes And again that's " The original transcription is "of those example No books are open " The original transcription is "the two main ones markdown is gonna " The original transcription is "I started using Boto three but I " The original transcription is "absolutely upgrade on bits fun because you " The original transcription is "That's the python Asi que We're getting " The original transcription is "the Internet s Oh this is from " The original transcription is "this is from Sarraf He's the author " The original transcription is "right up here then the title of " The original transcription is "but definitely use Lambda to turn your " The original transcription is "then edit your ec2 instance or the " Number of tasks sent to review: 15

As you’re completing tasks, you should see these mis-transcriptions with the associated video clips. See the following screenshot.

Human loop statuses that are complete display Completed. It’s not required to complete all human review tasks before continuing. Having 3–5 finished tasks is typically sufficient to see how technical terms can be extracted from the results. See the following code:

completed_human_loops = [] for human_loop_name in human_loops_started: resp = a2i.describe_human_loop(HumanLoopName=human_loop_name) print(f'HumanLoop Name: {human_loop_name}') print(f'HumanLoop Status: {resp["HumanLoopStatus"]}') print(f'HumanLoop Output Destination: {resp["HumanLoopOutput"]}') print('\n') if resp["HumanLoopStatus"] == "Completed": completed_human_loops.append(resp)

When all tasks are complete, Amazon A2I stores results in your S3 bucket and sends an Amazon CloudWatch event (you can check for these on your AWS Management Console). Your results should be available in the S3 bucket OUTPUT_PATH when all work is complete. You can print the results with the following code:

import re import pprint pp = pprint.PrettyPrinter(indent=4) for resp in completed_human_loops: splitted_string = re.split('s3://' + BUCKET + '/', resp['HumanLoopOutput']['OutputS3Uri']) output_bucket_key = splitted_string[1] response = s3.get_object(Bucket=BUCKET, Key=output_bucket_key) content = response["Body"].read() json_output = json.loads(content) pp.pprint(json_output) print('\n')

Step 3: Improving transcription using custom vocabulary

You can use the corrected transcriptions from our human reviewers to parse the results to identify the domain-specific terms you want to add to a custom vocabulary. To get a list of all human-reviewed words, enter the following code:

corrected_words = [] for resp in completed_human_loops: splitted_string = re.split('s3://' + BUCKET + '/', resp['HumanLoopOutput']['OutputS3Uri']) output_bucket_key = splitted_string[1] response = s3.get_object(Bucket=BUCKET, Key=output_bucket_key) content = response["Body"].read() json_output = json.loads(content) # add the human-reviewed answers split by spaces corrected_words += json_output['humanAnswers'][0]['answerContent']['transcription'].split(" ")

We want to parse through these words and look for uncommon English words. An easy way to do this is to use a large English corpus and verify if our human-reviewed words exist in this corpus. In this use case, we use an English-language corpus from Natural Language Toolkit (NLTK), a suite of open-source, community-driven libraries for natural language processing research. See the following code:

# Create dictionary of English words # Note that this corpus of words is not 100% exhaustive import nltk nltk.download('words') from nltk.corpus import words my_dict=set(words.words()) word_set = set([]) for word in remove_contractions(corrected_words): if word: if word.lower() not in my_dict: if word.endswith('s') and word[:-1] in my_dict: print("") elif word.endswith("'s") and word[:-2] in my_dict: print("") else: word_set.add(word) for word in word_set: print(word)

The words you find may vary depending on which videos you’ve transcribed and what threshold you’ve used. The following code is an example of output from the Amazon A2I results of the first and third videos from the playlist (see the Getting Started section earlier):

including machine-learning grabbing amazon boto3 started t3 called sarab ecr using ebs internet jupyter distributing opt/ml optimized desktop tokenizing s3 sdk encrypted relying sagemaker datasets upload iam gonna managing wanna vpc managed mars.r ec2 blazingtext

With these technical terms, you can now more easily manually create a custom vocabulary of those terms that we want Amazon Transcribe to recognize. You can use a custom vocabulary table to tell Amazon Transcribe how each technical term is pronounced and how it should be displayed. For more information on custom vocabulary tables, see Create a Custom Vocabulary Using a Table.

While you process additional videos on the same topic, you can keep updating this list, and the number of new technical terms you have to add will likely decrease each time you get a new video.

We built a custom vocabulary (see the following code) using parsed Amazon A2I results from the first and third videos with a 0.5 THRESHOLD confidence value. You can use this vocabulary for the rest of the notebook:

finalized_words=[['Phrase','IPA','SoundsLike','DisplayAs'], # This top line denotes the column headers of the text file. ['machine-learning','','','machine learning'], ['amazon','','am-uh-zon','Amazon'], ['boto-three','','boe-toe-three','Boto3'], ['T.-three','','tee-three','T3'], ['Sarab','','suh-rob','Sarab'], ['E.C.R.','','ee-see-are','ECR'], ['E.B.S.','','ee-bee-ess','EBS'], ['jupyter','','joo-pih-ter','Jupyter'], ['opt-M.L.','','opt-em-ell','/opt/ml'], ['desktop','','desk-top','desktop'], ['S.-Three','','ess-three','S3'], ['S.D.K.','','ess-dee-kay','SDK'], ['sagemaker','','sage-may-ker','SageMaker'], ['mars-dot-r','','mars-dot-are','mars.R'], ['I.A.M.','','eye-ay-em','IAM'], ['V.P.C.','','','VPC'], ['E.C.-Two','','ee-see-too','EC2'], ['blazing-text','','','BlazingText'], ]

After saving your custom vocabulary table to a text file and uploading it to an S3 bucket, create your custom vocabulary with a specified name so Amazon Transcribe can use it:

# The name of your custom vocabulary must be unique! vocab_improved='sagemaker-custom-vocab' transcribe = boto3.client("transcribe") response = transcribe.create_vocabulary( VocabularyName=vocab_improved, LanguageCode='en-US', VocabularyFileUri='s3://' + BUCKET + '/' + custom_vocab_file_name ) pp.pprint(response)

Wait until the VocabularyState displays READY before continuing. This typically takes up to a few minutes. See the following code:

# Wait for the status of the vocab you created to finish while True: response = transcribe.get_vocabulary( VocabularyName=vocab_improved ) status = response['VocabularyState'] if status in ['READY', 'FAILED']: print(status) break print("Not ready yet...") time.sleep(5)

Step 4: Improving transcription using custom vocabulary

After you create your custom vocabulary, you can call your transcribe function to start another transcription job, this time with your custom vocabulary. See the following code:

job_name_custom_vid_0='AWS-custom-0-using-' + vocab_improved + str(time_now) job_names_custom = [job_name_custom_vid_0] transcribe(job_name_custom_vid_0, folder_path+all_videos[0], BUCKET, vocab_name=vocab_improved)

Wait for the status of your transcription job to display COMPLETED again.

Write the new transcripts to new .txt files with the following code:

# Save the improved transcripts i = 1 for list_ in all_sentences_and_times_custom: file = open(f"improved_transcript_{i}.txt","w") for tup in list_: file.write(tup['sentence'] + "\n") file.close() i = i + 1

Results and analysis

Up to this point, you may have completed this use case with a single video. The remainder of this post refers to the four videos that we used to analyze the results of this workflow. For more information, see the Getting Started section at the beginning of this post.

To analyze metrics on a larger sample size for this workflow, we generated a ground truth transcript in advance, a transcription before the custom vocabulary, and a transcription after the custom vocabulary for each video in the playlist.

The first and third videos are the in-sample videos used to build the custom vocabulary you saw earlier. The second and fourth videos are used as out-sample videos to test Amazon Transcribe again after building the custom vocabulary. Run the associated code blocks to download these transcripts.

Comparing word error rates

The most common metric for speech recognition accuracy is called word error rate (WER), which is defined to be WER =(S+D+I)/N, where S, D, and I are the number of substitution, deletion, and insertion operations, respectively, needed to get from the outputted transcript to the ground truth, and N is the total number of words. This can be broadly interpreted to be the proportion of transcription errors relative to the number of words that were actually said.

We use a lightweight open-source Python library called JiWER for calculating WER between transcripts. See the following code:

!pip install jiwer from jiwer import wer import jiwer

For more information, see JiWER: Similarity measures for automatic speech recognition evaluation.

We calculate our metrics for the in-sample videos (the videos that were used to build the custom vocabulary). Using the code from the notebook, the following code is the output:

===== In-sample videos ===== Processing video #1 The baseline WER (before using custom vocabularies) is 5.18%. The WER (after using custom vocabularies) is 2.62%. The percentage change in WER score is -49.4%. Processing video #3 The baseline WER (before using custom vocabularies) is 11.94%. The WER (after using custom vocabularies) is 7.84%. The percentage change in WER score is -34.4%.

To calculate our metrics for the out-sample videos (the videos that Amazon Transcribe hasn’t seen before), enter the following code:

===== Out-sample videos ===== Processing video #2 The baseline WER (before using custom vocabularies) is 7.55%. The WER (after using custom vocabularies) is 6.56%. The percentage change in WER score is -13.1%. Processing video #4 The baseline WER (before using custom vocabularies) is 10.91%. The WER (after using custom vocabularies) is 8.98%. The percentage change in WER score is -17.6%.

Reviewing the results

The following table summarizes the changes in WER scores.

If we consider absolute WER scores, the initial WER of 5.18%, for instance, might be sufficiently low for some use cases—that’s only around 1 in 20 words that are mis-transcribed! However, this rate can be insufficient for other purposes, because domain-specific terms are often the least common words spoken (relative to frequent words such as “to,” “and,” or “I”) but the most commonly mis-transcribed. For applications like search engine optimization (SEO) and video organization by topic, you may want to ensure that these technical terms are transcribed correctly. In this section, we look at how our custom vocabulary impacted the transcription rates of several important technical terms.

Metrics for specific technical terms

For this post, ground truth refers to the true transcript that was transcribed by hand, original transcript refers to the transcription before applying the custom vocabulary, and new transcript refers to the transcription after applying the custom vocabulary.